Introduction to Autonomous Robots and Ethics

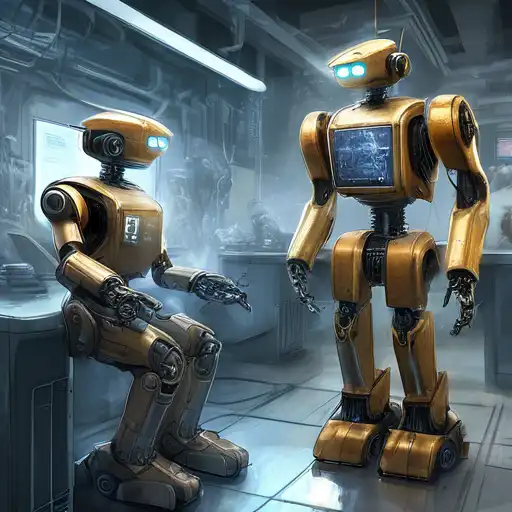

Autonomous robots, machines capable of performing tasks without human intervention, are increasingly prevalent in sectors ranging from manufacturing to healthcare, and their spread raises profound ethical questions. As we integrate these robots into our daily lives, it's imperative to consider the ethical implications of their autonomy.

The Core Ethical Dilemmas

Autonomous robots present several ethical dilemmas, including accountability, privacy, and the potential for harm. Who is responsible when an autonomous robot makes a decision that leads to negative consequences? How do we ensure these machines respect individual privacy? How do we keep them from harming the people they work alongside? These questions underscore the need for a robust ethical framework to guide the development and deployment of autonomous robots.

Accountability and Responsibility

One of the most pressing issues is determining accountability in the event of malfunctions or unintended actions. Unlike traditional machines, autonomous robots can make decisions based on complex algorithms and data inputs, blurring the lines of responsibility between developers, operators, and the machines themselves.

Privacy Concerns

With their ability to collect and process vast amounts of data, autonomous robots pose significant privacy risks. Ensuring that these machines adhere to strict data protection standards is crucial to safeguarding individual privacy rights.

Developing Ethical Guidelines

To address these ethical challenges, it's essential to develop comprehensive guidelines that govern the design, programming, and operation of autonomous robots. These guidelines should prioritize human welfare, transparency, and accountability, ensuring that robots serve the greater good without compromising ethical standards.

Transparency in Decision-Making

Ensuring that the decision-making processes of autonomous robots are transparent is vital for building trust and accountability. Stakeholders must have access to information about how these machines operate and make decisions, allowing for oversight and intervention when necessary.
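One way to make this kind of oversight concrete is to have the robot record each decision together with the inputs and reasoning behind it. The following is a minimal Python sketch of that idea, not a reference to any particular robotics framework: the `DecisionRecord` and `DecisionLog` names, the field names, and the example sensor reading are all hypothetical and chosen only for illustration.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One logged decision: what the robot saw, what it chose, and why."""
    action: str
    inputs: dict
    rationale: str
    # Timestamp is filled in automatically (UTC, ISO 8601 string).
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionLog:
    """Append-only, in-memory log that a reviewer can query or export."""

    def __init__(self):
        self._records = []

    def record(self, action, inputs, rationale):
        # Store the decision alongside the data and reasoning that produced it.
        entry = DecisionRecord(action=action, inputs=inputs, rationale=rationale)
        self._records.append(entry)
        return entry

    def find_by_action(self, action):
        # Let an overseer pull every instance of a given action for review.
        return [r for r in self._records if r.action == action]

    def export_json(self):
        # Serialize all records so the log can be shared outside the system.
        return json.dumps([asdict(r) for r in self._records], indent=2)


# Hypothetical usage: two decisions made from a distance reading.
log = DecisionLog()
log.record("stop", {"obstacle_distance_m": 0.4}, "obstacle inside safety margin")
log.record("proceed", {"obstacle_distance_m": 3.2}, "path clear")
print(log.export_json())
```

A real system would also need tamper-resistant storage and access controls on the log itself, since the recorded inputs may contain personal data.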

Prioritizing Human Welfare

At the heart of any ethical guidelines should be the principle of prioritizing human welfare. Autonomous robots should be designed to enhance human life, not to replace or harm the people they serve. This includes implementing safeguards to prevent misuse and ensuring that robots operate within ethical boundaries.

Conclusion

The ethics of autonomous robots is a complex and evolving field that requires ongoing dialogue among technologists, ethicists, policymakers, and the public. By addressing these ethical challenges head-on, we can harness the benefits of autonomous robots while minimizing potential harms. As we move forward, it's crucial to remain vigilant, ensuring that these technologies are developed and used in a manner that aligns with our collective values and ethical standards.
